Background

This blog post provides an overview of bias in AI systems, covering its main forms, where it comes from, and how it can be detected and mitigated. It emphasizes why identifying and mitigating bias is essential to developing and deploying AI that is fair, accurate, and ethical.

Introduction

AI systems are increasingly integrated into various aspects of our lives, from healthcare and finance to criminal justice and education. However, these systems are not inherently neutral; they can perpetuate and even amplify existing societal biases. Bias in AI refers to systematic errors in AI outputs that disproportionately favor or disfavor certain groups of people. These biases can lead to unfair or discriminatory outcomes, undermining the potential benefits of AI and eroding public trust.

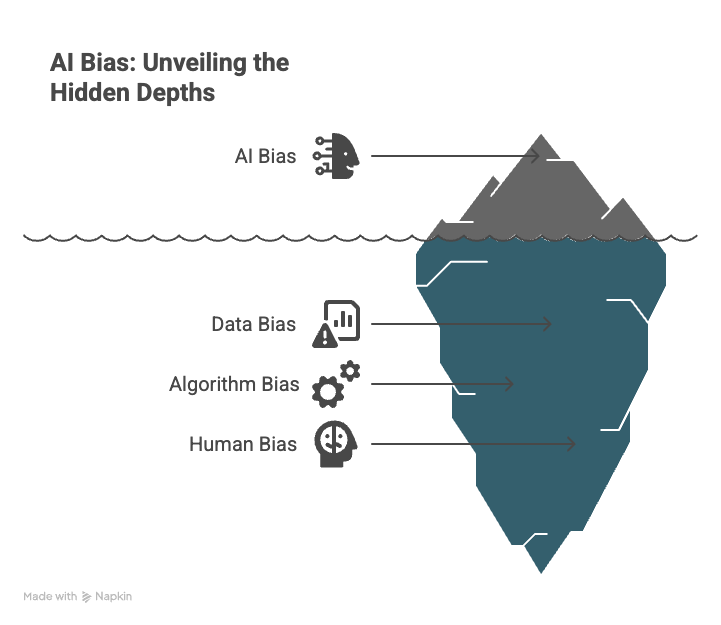

Types of Bias in AI

Several types of bias can affect AI systems, each arising from different sources and manifesting in distinct ways:

1. Data Bias

Data bias is one of the most common and significant sources of bias in AI. It occurs when the data used to train an AI model is not representative of the population it is intended to serve.

- Sampling Bias: This occurs when the training data is collected in a way that systematically excludes or underrepresents certain groups. For example, if a facial recognition system is trained primarily on images of light-skinned individuals, it may perform poorly on individuals with darker skin tones.

- Historical Bias: This reflects existing societal biases present in historical data. For instance, if historical hiring data shows a preference for male candidates, an AI system trained on this data may perpetuate gender bias in hiring decisions.

- Representation Bias: This arises when certain groups are not adequately represented in the training data, leading to poor performance for those groups. This can occur due to data scarcity or limitations in data collection methods.

- Measurement Bias: This occurs when the values recorded for features or labels are collected or measured in a way that systematically favors or disfavors certain groups, even when those groups are well represented in the data. For example, if a survey question is worded in a way that is more likely to elicit a positive response from one group than another, the resulting data will be biased.
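
A simple first check for sampling and representation bias is to compare each group's share of the training data with its share of the population the system is meant to serve. The sketch below assumes a group label is available for every record; the helper `representation_report`, the reference shares, and the 0.8 cut-off are hypothetical choices for illustration, not a standard.

```python
from collections import Counter


def representation_report(groups, reference_shares, min_ratio=0.8):
    """Compare each group's share of the training data with a reference share.

    groups: one group label per training record.
    reference_shares: expected share of each group in the target population
        (hypothetical numbers here; in practice e.g. census figures).
    min_ratio: illustrative cut-off below which a group is flagged.
    """
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        ratio = observed / expected
        report[group] = {
            "observed": observed,
            "expected": expected,
            "ratio": ratio,
            "underrepresented": ratio < min_ratio,
        }
    return report


# Hypothetical training set: 80 records from group A, 20 from group B.
training_groups = ["A"] * 80 + ["B"] * 20
population = {"A": 0.6, "B": 0.4}

for group, row in representation_report(training_groups, population).items():
    flag = "FLAG" if row["underrepresented"] else "ok"
    print(group, f"{row['observed']:.2f} vs {row['expected']:.2f}",
          f"ratio={row['ratio']:.2f}", flag)
```

Here group B makes up 20% of the data but 40% of the hypothetical population, so it is flagged. A check like this only catches under-representation; it says nothing about historical or measurement bias in the records themselves.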

2. Algorithm Bias

Algorithm bias refers to biases that arise from the design or implementation of the AI algorithm itself.

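- Feature Selection Bias: This occurs when the algorithm is designed to prioritize features or variables that are correlated with protected attributes, such as race or gender. Such features act as proxies, so the model can produce discriminatory outcomes even when the protected attributes themselves are excluded.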

- Optimization Bias: This arises when the algorithm is optimized for an aggregate objective, such as overall accuracy, that is dominated by the majority group, so that good performance on that group can come at the expense of performance on minority groups.

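- Evaluation Bias: This occurs when the metrics or benchmark data used to evaluate the AI system do not reflect the groups it will serve, for example when a single aggregate accuracy score hides poor performance on a small group, leading to an inaccurate assessment of its fairness.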
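
Optimization and evaluation bias often show up together: a model tuned for overall accuracy can score well in aggregate while failing a small group, and an evaluation that reports only the aggregate number will miss it. A minimal sketch, using made-up predictions and a hypothetical `accuracy_by_group` helper:

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return overall accuracy and a dict of per-group accuracy."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


# Hypothetical results: 90 majority-group records, all classified correctly,
# and 10 minority-group records, only half classified correctly.
y_true = [1] * 100
y_pred = [1] * 90 + [1] * 5 + [0] * 5
groups = ["majority"] * 90 + ["minority"] * 10

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(f"overall accuracy: {overall:.2f}")  # overall accuracy: 0.95
print(per_group)                           # {'majority': 1.0, 'minority': 0.5}
```

The aggregate 95% looks strong, yet the minority group gets only half of its cases right. Reporting metrics per group is a cheap guard against this kind of evaluation bias.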

3. Human Bias

Human bias refers to biases introduced by the humans involved in the design, development, and deployment of AI systems.

- Confirmation Bias: This occurs when developers unconsciously seek out or interpret data in a way that confirms their existing beliefs or biases.

- Anchoring Bias: This occurs when developers rely too heavily on initial information or assumptions, even when that information is inaccurate or irrelevant.

- Availability Bias: This occurs when developers overestimate the importance of information that is easily available or memorable, leading to biased decisions.

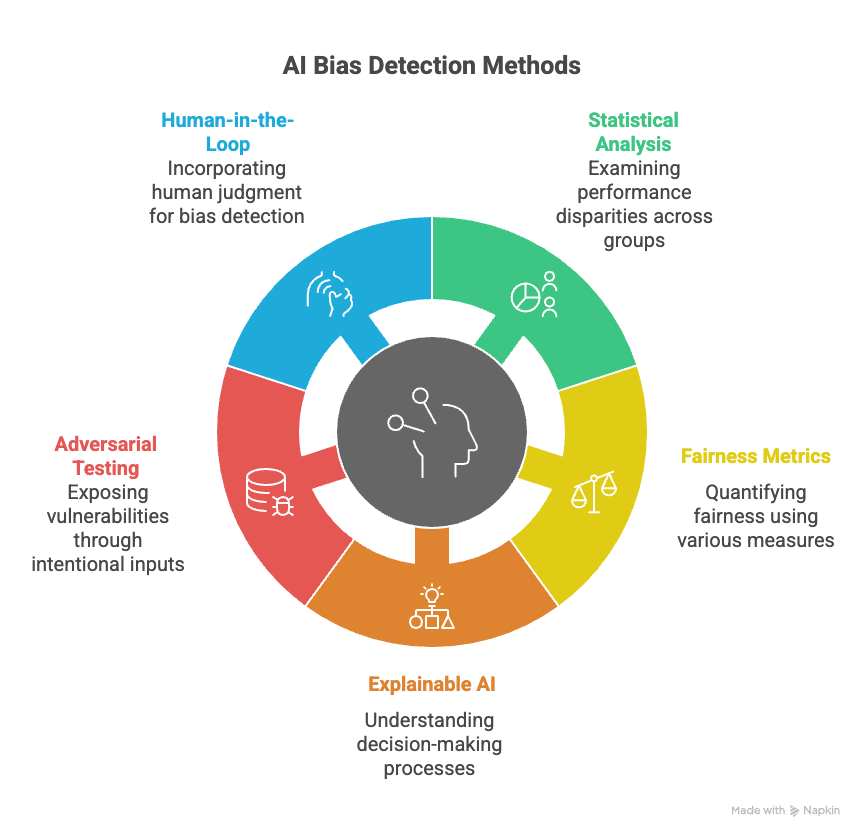

Methods for Detecting Bias in AI

Detecting bias in AI systems is a critical step in ensuring fairness and accuracy. Several methods can be used to identify and quantify bias:

1. Statistical Analysis

Statistical analysis involves examining the performance of the AI system across different demographic groups to identify disparities.

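- Disparate Impact Analysis: This involves comparing the rates of favorable outcomes (for example, selection rates) that the AI system produces for different groups. The “four-fifths” or “80% rule” from U.S. employment-selection guidelines is often used as a rule of thumb: a selection rate for a protected group that is less than 80% of the rate for the group with the highest selection rate is treated as evidence of disparate impact. Because the rule is a ratio threshold rather than a significance test, it is often complemented by a statistical test.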

- Demographic Parity: This measures whether the AI system produces positive outcomes at similar rates for different demographic groups, without conditioning on the true outcome (that is, regardless of whether individuals are actually qualified).

- Equal Opportunity: This measures whether the AI system has similar true positive rates for different demographic groups.

- Predictive Parity: This measures whether the AI system has similar positive predictive values for different demographic groups.
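
These group comparisons can be computed directly from a model's predictions. The sketch below assumes binary labels where 1 is the favorable outcome and one group label per record; the helper names are hypothetical. It reports each group's selection rate (the quantity compared under demographic parity), true positive rate (equal opportunity), and positive predictive value (predictive parity), plus each group's disparate impact ratio relative to the group with the highest selection rate, checked against the 80% threshold.

```python
def _safe_div(num, den):
    """Return num / den, or None when the denominator is zero."""
    return num / den if den else None


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, TPR, and PPV for binary labels (1 = favorable)."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gg in enumerate(groups) if gg == g]
        tp = sum(1 for i in idx if y_true[i] == 1 and y_pred[i] == 1)
        fp = sum(1 for i in idx if y_true[i] == 0 and y_pred[i] == 1)
        fn = sum(1 for i in idx if y_true[i] == 1 and y_pred[i] == 0)
        rates[g] = {
            "selection_rate": _safe_div(tp + fp, len(idx)),  # demographic parity
            "tpr": _safe_div(tp, tp + fn),                   # equal opportunity
            "ppv": _safe_div(tp, tp + fp),                   # predictive parity
        }
    return rates


def disparate_impact_ratios(rates):
    """Each group's selection rate divided by the highest group selection rate."""
    best = max(r["selection_rate"] for r in rates.values())
    if best == 0:
        return {g: None for g in rates}
    return {g: r["selection_rate"] / best for g, r in rates.items()}


# Hypothetical predictions for two groups of five applicants each.
y_true = [1, 1, 1, 0, 0] + [1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0] + [1, 1, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5

rates = group_rates(y_true, y_pred, groups)
for g, ratio in disparate_impact_ratios(rates).items():
    flag = "below 80% rule" if ratio < 0.8 else "ok"
    print(g, rates[g], f"impact ratio={ratio:.2f}", flag)
```

In this made-up data, group B's selection rate is half of group A's, well under the 80% threshold, and its true positive rate is lower, while its positive predictive value is higher. Different criteria can point in different directions on the same predictions, which is one reason to report several of them.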

2. Fairness Metrics

Fairness metrics are quantitative measures used to assess the fairness of AI systems. Several fairness metrics have been developed, each with its own strengths and limitations.

- Statistical Parity Difference: This measures the difference in the proportion of positive outcomes between different groups.

- Equal Opportunity Difference: This measures the difference in the true positive rates between different groups.

- Average Odds Difference: This measures the average of the difference in false positive rates and the difference in true positive rates between groups. A variant that averages the absolute values of these differences is also used.
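
The three differences can be sketched in a few lines. The sign convention below (unprivileged group minus privileged group, with 1 as the favorable label) follows the one used by IBM's AI Fairness 360 toolkit, so 0 means parity and negative values mean the unprivileged group receives the favorable outcome less often. The function names are hypothetical and are not that toolkit's API, and each group is assumed to contain both positive and negative true labels, since otherwise a rate is undefined.

```python
def _binary_rates(y_true, y_pred):
    """Positive-prediction rate, TPR, and FPR for one group (labels are 0/1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    positive_rate = (tp + fp) / len(y_true)
    tpr = tp / (tp + fn)
    fpr = fp / (fp + tn)
    return positive_rate, tpr, fpr


def fairness_differences(y_true, y_pred, groups, unprivileged, privileged):
    """Statistical parity, equal opportunity, and average odds differences."""
    def subset(g):
        pairs = [(t, p) for t, p, gg in zip(y_true, y_pred, groups) if gg == g]
        return [t for t, _ in pairs], [p for _, p in pairs]

    pr_u, tpr_u, fpr_u = _binary_rates(*subset(unprivileged))
    pr_p, tpr_p, fpr_p = _binary_rates(*subset(privileged))
    return {
        "statistical_parity_difference": pr_u - pr_p,
        "equal_opportunity_difference": tpr_u - tpr_p,
        "average_odds_difference": 0.5 * ((fpr_u - fpr_p) + (tpr_u - tpr_p)),
    }


# Hypothetical predictions: group "A" is privileged, group "B" is unprivileged.
y_true = [1, 1, 1, 0, 0] + [1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0] + [1, 1, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5

for name, value in fairness_differences(y_true, y_pred, groups, "B", "A").items():
    print(f"{name}: {value:+.3f}")
```

For this data all three values are negative (about -0.40, -0.33, and -0.42), consistent with group B being selected less often.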

3. Explainable AI (XAI) Techniques

Explainable AI (XAI) techniques can be used to understand how an AI system makes decisions and identify potential sources of bias.

- Feature Importance Analysis: This involves identifying the features that have the greatest influence on the AI system’s predictions. If certain features that are correlated with protected attributes are found to be highly influential, this may indicate bias.

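- Decision Tree Visualization: This involves visualizing the decision-making process of the AI system as a decision tree, either directly when the model is a tree or through a surrogate tree trained to mimic the model's predictions. This can help identify patterns or rules that may be biased.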

- SHAP (SHapley Additive exPlanations) Values: SHAP values provide a way to explain the contribution of each feature to the AI system’s predictions for individual instances. This can help identify instances where the AI system is making biased predictions.
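
To make the idea behind SHAP concrete, the sketch below computes exact Shapley values for a single prediction by brute force: it enumerates every coalition of features and, for features outside a coalition, substitutes a baseline value. This illustrates the Shapley value definition under that single-baseline assumption; it is not how the `shap` library computes its explanations, which relies on faster estimators. The model, feature names, and numbers are hypothetical, and the cost grows as 2^n in the number of features, so it only suits tiny examples.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x), with absent features set to baseline."""
    n = len(x)

    def value(coalition):
        # Evaluate the model with only the coalition's features taken from x.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical linear scoring model; "zip_flag" stands in for a feature that
# might act as a proxy for a protected attribute.
features = ["income", "zip_flag", "experience"]
weights = [0.5, 2.0, 0.3]


def score(z):
    return sum(w * v for w, v in zip(weights, z))


applicant = [4.0, 1.0, 2.0]
reference = [3.0, 0.0, 2.0]  # hypothetical "average applicant" baseline

for name, contribution in zip(features, shapley_values(score, applicant, reference)):
    print(f"{name}: {contribution:+.2f}")
```

Here most of the score above the baseline is attributed to `zip_flag`, the kind of pattern that would prompt a closer look at whether that feature is acting as a proxy. The attributions always sum to `model(x) - model(baseline)`, which is a useful sanity check.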

4. Adversarial Testing

Adversarial testing involves intentionally creating inputs that are designed to expose vulnerabilities or biases in the AI system.

- Data Poisoning: In a testing setting, this involves deliberately injecting biased or malicious records into a copy of the training data to see how easily the AI system's behavior can be manipulated.

- Adversarial Examples: This involves creating inputs that are slightly modified to cause the AI system to make incorrect predictions.
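
For a linear scoring model, the idea behind adversarial examples can be shown exactly. The gradient of the score s(x) = w · x + b with respect to x is simply w, so moving every feature by ε against the sign of its weight (the fast gradient sign method, FGSM) lowers the score by the largest possible amount for a perturbation that changes each feature by at most ε. For the same reason, the smallest such ε that reaches the decision boundary is |s(x)| divided by the sum of the absolute weights. The weights, bias, and applicant below are hypothetical; real models are usually nonlinear, where FGSM is only a first-order approximation.

```python
def linear_score(w, b, x):
    """Score s(x) = w . x + b; a positive score means a favorable decision."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, epsilon):
    """Shift each feature by epsilon against sign(w) to push the score down."""
    return [xi - epsilon * sign(wi) for wi, xi in zip(w, x)]


def min_flip_epsilon(w, b, x):
    """Smallest per-feature budget that moves a linear score to the boundary."""
    return abs(linear_score(w, b, x)) / sum(abs(wi) for wi in w)


# Hypothetical model and applicant (features already scaled to [0, 1]).
w, b = [0.8, -0.5, 0.3], -0.2
applicant = [0.5, 0.2, 0.4]

adversarial = fgsm_linear(w, applicant, epsilon=0.2)
print(round(linear_score(w, b, applicant), 2))      # 0.22 (favorable)
print(round(linear_score(w, b, adversarial), 2))    # -0.1 (unfavorable)
print(round(min_flip_epsilon(w, b, applicant), 4))  # 0.1375
```

Comparing `min_flip_epsilon` across applicants from different groups is one possible way to turn this into a bias probe: if decisions for one group can be flipped by systematically smaller perturbations, that group's outcomes are more fragile.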

5. Human-in-the-Loop Evaluation

Human-in-the-loop evaluation involves incorporating human judgment into the evaluation process to identify potential biases that may not be detected by automated methods.

- Bias Audits: This involves having a diverse group of individuals review the AI system’s outputs and identify potential biases.

- User Feedback: This involves collecting feedback from users about their experiences with the AI system to identify potential biases.

Mitigation Strategies

Once bias has been detected, several strategies can be used to mitigate its impact:

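- Data Augmentation: This involves adding new or synthetically generated examples to the training set, such as additional collected records or transformed copies of existing ones, to improve the representation of underrepresented groups.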

- Re-weighting: This involves assigning different weights to different instances in the training data to compensate for imbalances.

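- Bias Correction Algorithms: These are algorithms designed specifically to reduce measured bias, applied before training (to the data), during training (to the model), or after training (to the predictions, for example by adjusting decision thresholds for each group).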

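- Regularization: This involves adding penalty terms or constraints to the training objective. Standard regularization helps prevent the model from overfitting to biased data, and fairness-specific regularizers additionally penalize differences in the model's behavior across groups.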

- Fairness-Aware Training: This involves training the AI model to explicitly optimize for fairness metrics.
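
Re-weighting can be made concrete with the reweighing scheme described by Kamiran and Calders (AI Fairness 360 ships a version as its `Reweighing` preprocessor). Each training example in group g with label y gets weight P(g) * P(y) / P(g, y), so that after weighting, group membership and label look statistically independent. The pure-Python sketch below uses hypothetical data and hypothetical function names.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Share of a group's total weight that sits on positive (label 1) examples."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(
        w for w, g, y in zip(weights, groups, labels) if g == group and y == 1
    )
    return positive / total


# Hypothetical training data: group A has 4/6 positives, group B has 1/4.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(weights, groups, labels, g), 3))  # 0.5 for both
```

The weights can then be passed to any learner that accepts per-example weights, for instance the `sample_weight` argument of `fit` on many scikit-learn estimators. Reweighing changes only how much each record counts; it does not correct labels that are themselves biased.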

Conclusion

Detecting and mitigating bias in AI systems is essential for ensuring fairness, accuracy, and ethical AI development. By understanding the different types of bias, employing appropriate detection methods, and implementing effective mitigation strategies, we can work towards creating AI systems that benefit all members of society. Continuous monitoring and evaluation are crucial to maintain fairness and address new biases that may emerge over time.