Background

This blog provides an overview of the security threats facing Artificial Intelligence (AI) systems and outlines potential mitigation strategies. As AI becomes increasingly integrated into critical infrastructure and decision-making processes, it is crucial to understand and address the unique security challenges it presents. This blog explores adversarial attacks, data poisoning, model stealing, and other vulnerabilities, offering insights into how to protect AI systems from malicious actors and ensure their reliability and trustworthiness.

Introduction

Artificial Intelligence (AI) is rapidly transforming various aspects of our lives, from healthcare and finance to transportation and security. As AI systems become more sophisticated and integrated into critical infrastructure, they also become attractive targets for malicious actors. Securing AI systems is essential to ensure their reliability, trustworthiness, and safety. This blog outlines the key threats facing AI and discusses potential mitigation strategies.

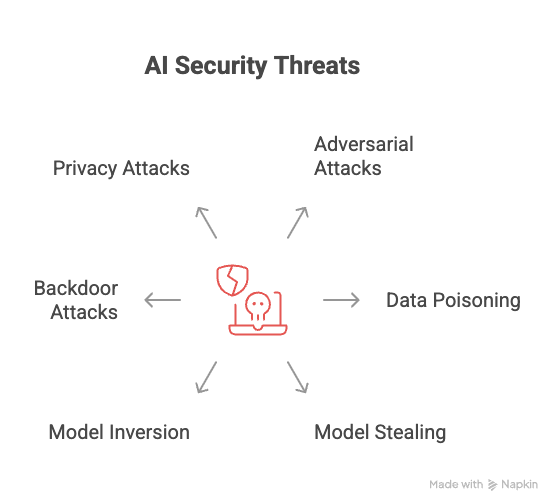

Types of Threats to AI Systems

AI systems are vulnerable to a range of security threats, including:

1. Adversarial Attacks

Adversarial attacks manipulate the data a model sees, often through subtle or imperceptible perturbations, so that the model makes incorrect predictions. They are commonly grouped by the phase in which they occur:

- Evasion Attacks: These attacks occur during the deployment phase, where an attacker modifies input data to evade detection or classification by a trained model. For example, adding a small amount of noise to an image can cause an image recognition system to misclassify it.

- Poisoning Attacks: These attacks occur during the training phase, where an attacker injects malicious data into the training dataset to corrupt the model’s learning process. Data poisoning is covered in more detail in the next section.
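To make the evasion case concrete, here is a minimal sketch of a single Fast Gradient Sign Method (FGSM) step against a toy logistic-regression classifier. The weights, the input, and the `epsilon` budget are made-up values chosen for illustration; a real attack would target a trained model and typically use a much smaller perturbation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a linear (logistic) model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """One FGSM step: move each feature by epsilon in the direction
    that increases the cross-entropy loss for the true label."""
    p = predict_proba(weights, bias, x)
    # For logistic loss, the gradient with respect to the input is (p - y) * w.
    grad = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (assumptions, not from any real system).
weights, bias = [2.0, -1.0, 0.5], 0.0
x = [0.3, 0.2, 0.1]
x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.2)

print("clean score:      ", predict_proba(weights, bias, x))
print("adversarial score:", predict_proba(weights, bias, x_adv))
```

Each feature moves by at most `epsilon`, yet the score crosses the 0.5 decision threshold, which is the essence of an evasion attack.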

2. Data Poisoning

Data poisoning attacks inject malicious samples into the training dataset, or tamper with existing ones, so that the trained model later makes incorrect predictions on legitimate data. Data poisoning attacks can be targeted or untargeted:

- Targeted Poisoning: The attacker aims to cause the model to misclassify specific inputs in a desired way.

- Untargeted Poisoning: The attacker aims to degrade the overall performance of the model without targeting specific inputs.
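The targeted case can be sketched with a deliberately simple model. The example below uses a one-dimensional nearest-centroid classifier, and all data points are invented for illustration. The attacker adds a few mislabeled points to drag one class centroid toward a chosen input.

```python
def fit_centroids(samples):
    """Fit a nearest-centroid classifier on (value, label) pairs."""
    sums, counts = {}, {}
    for x, y in samples:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))

# Illustrative clean training data: class 0 near 1.0, class 1 near 9.0.
clean = [(0.0, 0), (1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1), (10.0, 1)]

# The attacker injects far-away points labeled as class 0
# so that the class 0 centroid shifts toward the target input 6.0.
poison = [(12.0, 0), (12.0, 0), (12.0, 0)]

clean_model = fit_centroids(clean)
poisoned_model = fit_centroids(clean + poison)

target = 6.0
print("clean prediction:   ", predict(clean_model, target))
print("poisoned prediction:", predict(poisoned_model, target))
```

The poisoned model still classifies typical points such as 0.0 and 10.0 as before, which is part of what makes targeted poisoning hard to notice from aggregate accuracy alone.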

3. Model Stealing

Model stealing attacks involve an attacker attempting to replicate or extract information about a trained AI model without having access to the model’s parameters or training data. This can be achieved through:

- Querying the Model: The attacker repeatedly queries the model with different inputs and observes the outputs to infer the model’s architecture, parameters, or decision boundaries.

- Reverse Engineering: The attacker analyzes the model’s behavior and outputs to reconstruct its internal workings.
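As a rough illustration of query-based extraction, the sketch below treats the victim as a black box that returns only a label, and recovers its decision boundary by binary search. The `make_black_box` helper and the secret threshold are hypothetical stand-ins for a deployed prediction API; real models have far more parameters, but the principle of learning from input and output pairs is the same.

```python
def make_black_box(secret_threshold):
    """Hypothetical victim model: callers see labels, never the threshold."""
    def query(x):
        return 1 if x >= secret_threshold else 0
    return query

def extract_threshold(query, low, high, tolerance=1e-6):
    """Recover the decision boundary using only predicted labels.
    Assumes query(low) == 0 and query(high) == 1."""
    queries = 0
    while high - low > tolerance:
        mid = (low + high) / 2.0
        queries += 1
        if query(mid) == 1:
            high = mid   # boundary is at or below mid
        else:
            low = mid    # boundary is above mid
    return (low + high) / 2.0, queries

victim = make_black_box(secret_threshold=0.37)
stolen_threshold, n_queries = extract_threshold(victim, 0.0, 1.0)
print(f"estimated threshold {stolen_threshold:.6f} after {n_queries} queries")
```

A couple of dozen queries are enough here, which is why query budgets and monitoring matter as defenses.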

4. Model Inversion

Model inversion attacks aim to reconstruct sensitive information about the training data from a trained AI model. This can be achieved by:

- Reconstructing Input Data: The attacker attempts to reconstruct the original input data used to train the model by analyzing the model’s parameters or outputs.

- Inferring Sensitive Attributes: The attacker attempts to infer sensitive attributes about the training data, such as demographic information or medical conditions, by analyzing the model’s behavior.
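One simple way to picture attribute inference through model inversion: if an attacker knows a model's output for a person and some of that person's non-sensitive features, they can try each candidate value of the sensitive feature and keep the one that best explains the output. The `dosage_model` function and its coefficients below are invented for illustration.

```python
def dosage_model(age, weight, has_condition):
    """Hypothetical released model whose output depends on a sensitive flag."""
    return 0.1 * age + 0.05 * weight + 2.0 * has_condition

def invert_sensitive_attribute(known_age, known_weight, observed_output,
                               candidates=(0, 1)):
    """Pick the candidate value whose predicted output is closest
    to the output the attacker observed."""
    return min(
        candidates,
        key=lambda c: abs(dosage_model(known_age, known_weight, c) - observed_output),
    )

# The attacker observes the model's output for a person whose age and weight are known.
observed = dosage_model(50, 70, has_condition=1)
guess = invert_sensitive_attribute(50, 70, observed)
print("inferred has_condition =", guess)
```

In practice the attacker's knowledge is noisier and the model is more complex, so the inference is probabilistic rather than exact.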

5. Backdoor Attacks

Backdoor attacks involve injecting a hidden trigger into a model during training, which causes the model to misbehave when the trigger is present in the input data. The trigger is designed to be inconspicuous and only activated under specific conditions.
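The data-preparation side of such an attack can be sketched as follows. This only shows how a trigger patch and a target label might be planted in a training set; it does not train a model. The patch location, patch size, poisoning rate, and the 4x4 "images" are all assumptions made for illustration.

```python
def stamp_trigger(image, size=2, value=1.0):
    """Return a copy of `image` (a list of rows) with a size x size
    patch written into the bottom-right corner."""
    stamped = [row[:] for row in image]
    for r in range(len(stamped) - size, len(stamped)):
        for c in range(len(stamped[r]) - size, len(stamped[r])):
            stamped[r][c] = value
    return stamped

def poison_dataset(dataset, target_label, every_nth=10):
    """Stamp the trigger on every n-th sample and relabel it to target_label."""
    poisoned = []
    for i, (image, label) in enumerate(dataset):
        if i % every_nth == 0:
            poisoned.append((stamp_trigger(image), target_label))
        else:
            poisoned.append((image, label))
    return poisoned

# Illustrative dataset: twenty blank 4x4 images with labels 0, 1, 2.
clean = [([[0.0] * 4 for _ in range(4)], i % 3) for i in range(20)]
backdoored = poison_dataset(clean, target_label=7)
print(sum(1 for _, label in backdoored if label == 7), "samples carry the trigger")
```

A model trained on such data can learn to associate the corner patch with the target label while behaving normally on inputs without the patch.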

6. Privacy Attacks

AI systems that process sensitive data are vulnerable to privacy attacks, which aim to extract or infer private information about individuals from the model or its outputs. Examples include:

- Membership Inference Attacks: Determining whether a specific data point was used to train the model.

- Attribute Inference Attacks: Inferring sensitive attributes about individuals from the model’s outputs combined with other information the attacker already knows about them.
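A common baseline for membership inference relies on the observation that models tend to have lower loss on examples they were trained on. The sketch below flags a sample as a likely training member when its cross-entropy loss falls under a threshold. The threshold and the example probabilities are illustrative assumptions; in practice the threshold would be calibrated, for example using shadow models.

```python
import math

def cross_entropy(prob_true_class):
    """Loss for one sample, given the probability assigned to its true class."""
    return -math.log(max(prob_true_class, 1e-12))

def infer_membership(prob_true_class, loss_threshold):
    """Guess 'member' when the model is unusually confident on the sample."""
    return cross_entropy(prob_true_class) < loss_threshold

# Illustrative values: the model is very confident on one sample, unsure on another.
print(infer_membership(0.99, loss_threshold=0.5))
print(infer_membership(0.40, loss_threshold=0.5))
```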

Mitigation Strategies

To protect AI systems from these threats, a multi-layered approach is required, including:

1. Adversarial Defense Techniques

- Adversarial Training: Training the model on adversarial examples to make it more robust to adversarial attacks.

- Input Preprocessing: Applying techniques such as input sanitization, feature squeezing, and random transformations to reduce the effectiveness of adversarial perturbations.

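- Defensive Distillation: Training a second model on the softened probability outputs (soft labels) of an initial model rather than on hard labels, which smooths the decision surface and reduces sensitivity to small input perturbations.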

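- Gradient Masking: Obfuscating the gradients of the model to make it more difficult for attackers to craft adversarial examples. On its own this tends to be a weak defense, since attackers can often fall back on transfer-based or gradient-free attacks.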
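Of the input preprocessing techniques listed above, feature squeezing is perhaps the easiest to sketch. The example below reduces the bit depth of pixel values in the range 0 to 1, so that small perturbations collapse back to the same quantized input. The pixel values and bit depth are illustrative assumptions.

```python
def squeeze_bit_depth(pixels, bits):
    """Quantize values in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return [round(p * levels) / levels for p in pixels]

# Illustrative input and a slightly perturbed copy of it.
original = [0.20, 0.80]
perturbed = [0.23, 0.77]

print(squeeze_bit_depth(original, bits=2))
print(squeeze_bit_depth(perturbed, bits=2))
```

Both inputs map to the same squeezed values, so a model that sees only squeezed inputs is unaffected by this particular perturbation. Larger perturbations can still cross a quantization boundary, which is why squeezing is usually combined with other defenses.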

2. Data Poisoning Defense Techniques

- Data Sanitization: Filtering and cleaning the training data to remove potentially malicious or corrupted samples.

- Anomaly Detection: Identifying and removing anomalous data points that may be indicative of poisoning attacks.

- Robust Aggregation: Using robust statistical methods, such as the median or a trimmed mean, when combining data or model updates from multiple sources, so that a small number of poisoned samples has limited influence.

- Input Validation: Validating the integrity and authenticity of input data before using it to train the model.
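As a minimal sketch of anomaly-based sanitization, the filter below drops values whose modified z-score, computed from the median and the median absolute deviation (MAD), exceeds a cutoff. The 3.5 cutoff and 0.6745 constant are a conventional choice for this score, and the feature values are invented for illustration. Real pipelines usually work on multi-dimensional features and need more care.

```python
import statistics

def filter_outliers(values, threshold=3.5):
    """Keep values whose modified z-score is within the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return list(values)  # no spread to measure against
    return [v for v in values if 0.6745 * abs(v - median) / mad <= threshold]

# Illustrative feature values with one injected extreme sample.
training_values = [1.0, 1.1, 0.9, 1.2, 0.8, 1.0, 25.0]
sanitized = filter_outliers(training_values)

print("mean before:", statistics.mean(training_values))
print("mean after: ", statistics.mean(sanitized))
```

Median-based statistics are used here because, unlike the mean and standard deviation, they are not themselves distorted much by the poisoned point.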

3. Model Protection Techniques

- Access Control: Restricting access to the model and its parameters to authorized personnel only.

- Model Obfuscation: Applying techniques such as model compression, quantization, and encryption to make it more difficult for attackers to steal or reverse engineer the model.

- Watermarking: Embedding a unique identifier into the model to detect unauthorized copies or modifications.

- Differential Privacy: Adding calibrated noise during training or to the model’s outputs so that the result reveals little about any individual training record.
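The simplest setting in which to see differential privacy at work is a counting query protected by the Laplace mechanism, sketched below. The records, the predicate, and the `epsilon` value are illustrative assumptions. For model training, noise is more commonly added to clipped gradients, which this sketch does not cover.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Noisy count of matching records.
    A count changes by at most 1 when one record is added or removed,
    so the sensitivity is 1 and the noise scale is 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)  # seeded only to make the sketch reproducible
records = [{"age": a} for a in range(100)]
noisy = private_count(records, lambda r: r["age"] >= 60, epsilon=0.5, rng=rng)
print("noisy count:", noisy)
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of less accurate answers.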

4. Monitoring and Detection

- Anomaly Detection: Monitoring the model’s performance and behavior to detect anomalies that may be indicative of an attack.

- Intrusion Detection Systems: Implementing intrusion detection systems to detect and respond to malicious activity targeting the AI system.

- Logging and Auditing: Logging all interactions with the AI system and auditing the logs to identify suspicious activity.
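One monitoring signal that is easy to sketch is per-client query volume, since extraction attacks often need many queries. The hypothetical `QueryMonitor` class below records query timestamps and flags a client that exceeds a budget within a sliding window. The class name, limits, and client identifiers are assumptions for illustration, not part of any particular product.

```python
from collections import defaultdict, deque

class QueryMonitor:
    """Track recent queries per client and flag unusually high volume."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)

    def record(self, client_id, timestamp):
        """Log one query; return True if the client is over budget
        within the sliding window ending at `timestamp`."""
        window = self._history[client_id]
        window.append(timestamp)
        # Drop entries that have fallen out of the window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_queries

monitor = QueryMonitor(max_queries=3, window_seconds=60)
flags = [monitor.record("client-a", t) for t in (0, 1, 2, 3)]
print(flags)
```

A flag here is a prompt for review or throttling rather than proof of an attack, since legitimate batch users can also generate bursts.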

5. Security Best Practices

- Secure Development Lifecycle: Incorporating security considerations into all stages of the AI system development lifecycle.

- Regular Security Audits: Conducting regular security audits to identify and address vulnerabilities in the AI system.

- Incident Response Plan: Developing an incident response plan to handle security incidents and breaches.

- Employee Training: Training employees on security best practices and the risks associated with AI systems.

Conclusion

Securing AI systems is a complex and evolving challenge. As AI becomes more prevalent, it is crucial to understand the threats it faces and implement appropriate mitigation strategies. By adopting a multi-layered approach that includes adversarial defense techniques, data poisoning defense techniques, model protection techniques, monitoring and detection, and security best practices, we can protect AI systems from malicious actors and ensure their reliability, trustworthiness, and safety. Continuous research and development are needed to stay ahead of emerging threats and develop more effective security measures for AI systems.